MLOps: deploy and maintain a machine learning model

Introducing a machine learning model into your business process

It’s one thing to create an AI solution, but it’s another to start using it and reap the benefits. While many training courses can help you get up to speed with the former, ML2Grow is one of just a handful of companies with proven experience in helping you get the most out of an AI system.

Introducing an AI solution requires a wide range of skills and services, not just model building and data science. Its success depends on deploying services effectively, hosting models, introducing health monitoring, building efficient data pipelines and designing the accompanying software and hardware. ML2Grow has a team of experts who each play a role in the overall success of a solution. Non-technical skills are equally important. For instance, a business problem needs to be correctly translated into an appropriate technical solution, which makes it easier to adopt AI in the workplace and to transfer knowledge. We have put methods and processes in place to guide this translation.

Once a model has been successfully deployed into production, it still needs monitoring. Unexpected changes, such as a new geopolitical conflict or previously unseen objects in images, can suddenly stop it from working properly or make it gradually less accurate. When this happens, your team has to start over: preparing production-ready data, retraining the model and then cobbling together a script that may or may not get it up and running again.

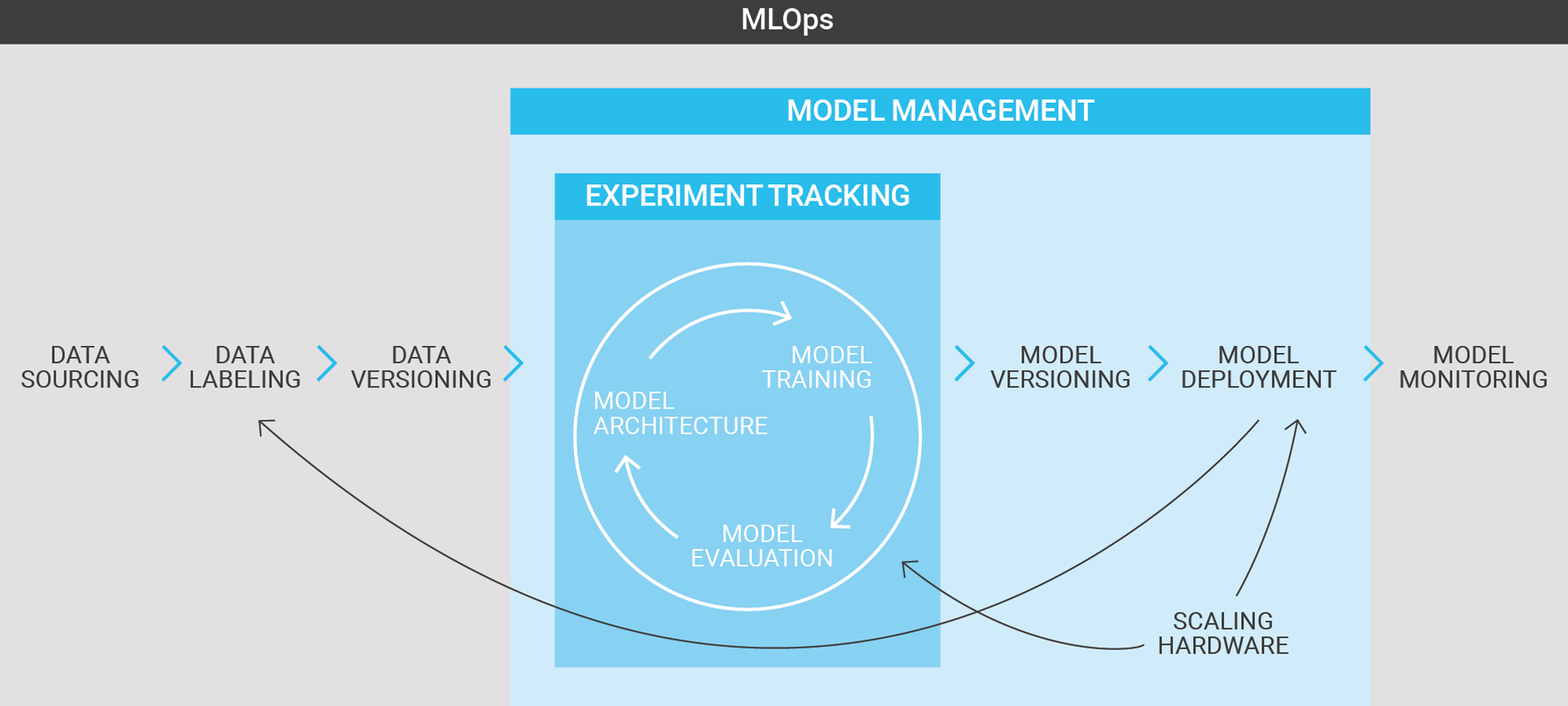

However, there is another way. Machine Learning Operations (MLOps) solves these problems by applying tried-and-tested data management practices within a repeatable framework for developing, testing and deploying AI models. MLOps makes it possible to deliver new models like any other type of application.

MLOps is a fairly recent term for a set of best practices that allow AI algorithms to be introduced into operational systems efficiently and robustly. These practices can be divided into three stages: design, model development and operations.

Design

In this stage, we focus on understanding the business and how the technical solution we introduce will affect the workflow. ML2Grow’s AI roadmap is a good example of how we structure solutions. It is important not to lose sight of how the end solution will affect the business process. That is why we constantly ask ourselves the same question at each stage: “How will things be done differently when the AI solution is in place?” We also calculate the return on investment, determine how stakeholders will be involved, define the scope and design, and draw up the development plan.

Model development

In this stage, we create the AI component. This involves identifying and transforming data, creating features, selecting a model and training and evaluating it. We carry out these processes iteratively until we have achieved the business goals. We use rapid application development tools to speed up this manual process. We also use MLOps tools to keep track of the models, experimental results, parameter settings and datasets used.
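To make the idea of tracking runs concrete, here is a minimal sketch in Python using only the standard library. It is an illustration only, not a description of any particular MLOps tool: the function names, the JSON Lines log file and the record fields are all hypothetical. Each training run is appended as one record holding the model name, its parameters, its evaluation metrics and a hash of the dataset, so a result can later be traced back to the exact data and settings that produced it.

```python
# Hypothetical experiment log (illustration only): one JSON record per training run.
import hashlib
import json
import time
from pathlib import Path


def dataset_fingerprint(rows):
    """SHA-256 over the rows (as canonical JSON), so each run records exactly which data it used."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update((json.dumps(row, sort_keys=True) + "\n").encode("utf-8"))
    return digest.hexdigest()


def log_run(log_path, model_name, params, metrics, rows):
    """Append one experiment record as a line of JSON and return it."""
    record = {
        "timestamp": time.time(),
        "model": model_name,
        "params": params,
        "metrics": metrics,
        "dataset_sha256": dataset_fingerprint(rows),
    }
    with Path(log_path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def best_run(log_path, metric):
    """Return the logged run with the highest value for `metric` (higher is assumed better)."""
    with Path(log_path).open(encoding="utf-8") as f:
        runs = [json.loads(line) for line in f if line.strip()]
    return max(runs, key=lambda run: run["metrics"][metric])
```

Because every record carries a dataset hash, two runs with identical parameters but different training data stay distinguishable, and `best_run` picks the top run for whichever metric the business goal calls for.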

Operations

In this stage, we devote all our attention to bringing the model into production. We monitor the model and detect significant risks, such as model drift, where accuracy degrades because production data (or the relationships within it) has moved away from what the model was trained on. We also implement strategies and tools to automatically retrain and deploy new models. This requires data engineering expertise, but also interaction with the environment and sensors.
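One common way to detect the input side of model drift is the Population Stability Index (PSI), which compares the distribution of a feature in production with its distribution in the training data. The sketch below is an illustration under stated assumptions, not necessarily the method used in our systems: bin edges are placed at quantiles of the training values, and the alert threshold of 0.25 is a widely quoted rule of thumb rather than a fixed standard. The function names are hypothetical. PSI only captures shifts in the inputs; a drop in accuracy once ground-truth labels arrive has to be monitored separately.

```python
# Hypothetical drift check (illustration only) based on the Population Stability Index.
import math
from bisect import bisect_right


def population_stability_index(reference, production, n_bins=10, eps=1e-6):
    """PSI between training-time (reference) and live (production) values of one feature.

    PSI = sum over bins of (q_i - p_i) * ln(q_i / p_i), where p_i and q_i are the
    fractions of reference and production values that fall into bin i.
    """
    ref_sorted = sorted(reference)
    # Bin edges at reference quantiles, so each bin holds about 1/n_bins of the training data.
    edges = [ref_sorted[len(ref_sorted) * k // n_bins] for k in range(1, n_bins)]

    def fractions(values):
        counts = [0] * n_bins
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # eps keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    p = fractions(reference)
    q = fractions(production)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_alert(reference, production, threshold=0.25):
    """Flag drift when PSI exceeds the threshold (0.25 is a rule of thumb, not a standard)."""
    return population_stability_index(reference, production) > threshold
```

In practice a check like this would run per feature on each new batch of production data, with an alert feeding into the retraining strategy described above.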

We always stress the importance of creating a production-ready repository in which to store models, and of setting up a model registry that holds all of each model’s metadata. Bringing machine learning into production is extremely challenging. We know this from experience, as we are constantly launching ground-breaking AI products for our clients. After delivery, we take care of the hosting, maintenance and support of the system. However, before a model is deployed to production, the client has three important decisions to make.
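A model registry can be pictured as a versioned catalogue: every trained model gets a version number, a pointer to its stored artifact and its metadata, plus a stage indicating whether it is in staging, production or archived. The minimal in-memory sketch below illustrates that idea only; the class names, stages and fields are hypothetical, and dedicated tools (MLflow’s Model Registry, for example) add persistent storage, access control and much more.

```python
# Hypothetical in-memory model registry (illustration only).
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelVersion:
    """One registered version of a model (hypothetical schema)."""
    name: str
    version: int
    artifact_uri: str  # where the serialized model is stored in the model repository
    metadata: dict  # e.g. dataset hash, parameters, evaluation metrics
    stage: str = "staging"  # "staging", "production" or "archived"
    registered_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class ModelRegistry:
    """Minimal in-memory registry: versioned models plus their metadata."""

    def __init__(self):
        self._versions = {}  # model name -> list of ModelVersion, oldest first

    def register(self, name, artifact_uri, metadata):
        """Add a new version in the 'staging' stage and return it."""
        versions = self._versions.setdefault(name, [])
        mv = ModelVersion(name, len(versions) + 1, artifact_uri, dict(metadata))
        versions.append(mv)
        return mv

    def promote(self, name, version):
        """Put one version into production and archive the current production version."""
        versions = self._versions.get(name, [])
        if not 1 <= version <= len(versions):
            raise ValueError(f"{name!r} has no version {version}")
        for mv in versions:
            if mv.stage == "production":
                mv.stage = "archived"
        target = versions[version - 1]
        target.stage = "production"
        return target

    def production(self, name):
        """Return the version currently in production, or None."""
        return next(
            (mv for mv in self._versions.get(name, []) if mv.stage == "production"),
            None,
        )
```

Promoting a new version automatically archives the previous one, so there is at most one production version per model name, and rolling back amounts to promoting an older version again.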

1. Hosting

Most machine learning models are used in ‘batch mode’, where predictions are computed periodically for a whole dataset at once. This minimises computational time and reduces dependencies on data sources. The models can also be used in real time, but this requires dedicated computing and data services and adds significantly to the cost.
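To illustrate what batch mode means in practice, the sketch below scores a whole set of records in fixed-size chunks and writes the predictions, stamped with a single scoring time, to CSV for downstream systems to read. `predict_batch` stands in for any model’s batch prediction function; the function names, the record layout (`id`, `features`) and the column names are assumptions made for this example.

```python
# Hypothetical batch scoring job (illustration only).
import csv
from datetime import datetime, timezone
from itertools import islice


def chunked(iterable, size):
    """Yield successive lists of at most `size` items."""
    it = iter(iterable)
    while chunk := list(islice(it, size)):
        yield chunk


def batch_score(records, predict_batch, out, chunk_size=1000):
    """Score every record in chunks and write id, prediction and scoring time as CSV.

    `records` is an iterable of dicts with "id" and "features" keys (assumed layout);
    `predict_batch` maps a list of feature vectors to a list of predictions;
    `out` is any writable text stream. Returns the number of records scored.
    """
    scored_at = datetime.now(timezone.utc).isoformat()  # one timestamp for the whole run
    writer = csv.writer(out)
    writer.writerow(["id", "prediction", "scored_at"])
    n_scored = 0
    for chunk in chunked(records, chunk_size):
        predictions = predict_batch([r["features"] for r in chunk])
        for record, prediction in zip(chunk, predictions):
            writer.writerow([record["id"], prediction, scored_at])
            n_scored += 1
    return n_scored
```

Because predictions are precomputed on a schedule (nightly, for example), consumers read a file or table instead of calling the model live. When writing to a real file, open it with `newline=""`, as the `csv` module documentation recommends.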

For certain use cases, such as computer vision, we embed the machine learning models in devices. In other use cases, cloud computing may be the most cost-effective solution. We offer a full-service package for our AI models. This means that we take care of deploying and scaling the model, and we make the entire experience worry-free for our clients.

2. Maintenance/support

As computer users, we are all familiar with security updates and with updates that improve efficiency or add functionality to our operating systems and software. Like any software, AI also needs maintenance and support. Our full-service package includes these services to give our clients complete peace of mind. We ensure that the AI system is automatically kept up to date and secure. If you would like to extend the functionality of your AI solution, or if a problem occurs, we offer various forms of support through our SLAs.

3. Retraining or re-calibration of the model

AI systems need to be updated frequently with the latest information. The system can be designed to retrain the AI model on this new data without human intervention. We configure the system and carry out quality checks on each new model before bringing it into production. Some models need to be recalibrated less frequently; we offer services for this using our in-house infrastructure. If your model just needs to be retrained, little human intervention will be required. However, if you want to add new features to the model, more in-depth training will be required.
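The quality checks mentioned above can be thought of as a gate between automatic retraining and deployment. The sketch below shows one common pattern, a champion/challenger comparison: the retrained model (challenger) replaces the current model (champion) only if it clears an absolute minimum score and performs at least as well as the champion on the same hold-out data. The function name, thresholds and the deliberately trivial mean-predictor “model” are hypothetical; in a real pipeline `train_fn` and `evaluate_fn` would wrap actual training and evaluation code.

```python
# Hypothetical retraining quality gate (illustration only), champion/challenger style.
from typing import Any, Callable, Sequence


def retrain_with_quality_gate(
    train_fn: Callable[[Sequence[Any]], Any],
    evaluate_fn: Callable[[Any, Sequence[Any]], float],
    champion: Any,
    new_data: Sequence[Any],
    holdout: Sequence[Any],
    min_score: float,
    min_gain: float = 0.0,
):
    """Retrain on fresh data and deploy the challenger only if it passes the checks.

    Returns (model_to_serve, promoted). Scores are assumed to be higher-is-better.
    """
    challenger = train_fn(new_data)
    challenger_score = evaluate_fn(challenger, holdout)
    champion_score = evaluate_fn(champion, holdout)
    passes_floor = challenger_score >= min_score  # absolute quality bar
    beats_champion = challenger_score >= champion_score + min_gain  # no regression
    if passes_floor and beats_champion:
        return challenger, True
    return champion, False  # keep serving the current model and flag for human review


# Toy usage: the "model" is just the mean of the training values, scored by negative MAE.
def train_mean(rows):
    return sum(rows) / len(rows)


def neg_mae(model, rows):
    return -sum(abs(r - model) for r in rows) / len(rows)


holdout = [10.0, 11.0, 12.0]
served, promoted = retrain_with_quality_gate(
    train_mean, neg_mae, champion=5.0, new_data=[10.0, 12.0], holdout=holdout, min_score=-2.0
)
print(served, promoted)  # 11.0 True
```

Keeping the champion whenever the gate fails means a bad batch of new data can never silently replace a working model; it simply triggers a review.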

MLOps as a Service

Artificial intelligence (AI) is a powerful technology that can be used in a variety of use cases. However, each AI model is only able to solve specific, narrowly defined tasks (e.g. detecting known defects, sales forecasting). The model also needs to be retrained over time so that it remains relevant, just as we need to recalibrate any digital tool to keep it accurate.

The sheer power of AI systems to discover tiny, hidden details in vast datasets means that they need dedicated computing and storage infrastructure to run smoothly. The algorithms in these systems also need to be constantly monitored by humans, as they can only ‘think’ within certain narrow limits. To explain: our physical world is changing at a rapid pace and becoming increasingly interconnected, so AI needs to detect patterns quickly. Paradoxically, however, this interconnectivity also poses a risk for existing AI models when events are not accounted for in the data (i.e. only their symptoms are reflected in the data). That can happen when events occur in a wider context, such as a global pandemic (e.g. the COVID-19 crisis) or a geopolitical crisis (e.g. the war between Ukraine and Russia).

At ML2GROW, we fully understand that not every company has the resources to invest in specialised infrastructure and AI experts (unless it is a multinational such as Google or Facebook). However, we firmly believe that AI puts real power at companies’ fingertips, enabling them to identify new insights. That is why we offer ‘MLOps as a Service’ for companies that want all the power of AI without the burden of operating and maintaining it themselves.

Deploying a machine learning model can sometimes be a headache. Your IT or data science team gets stressed from time to time because your model crashes. It seems to take aeons to set up the system, you do not have version control, and you cannot scale the capabilities of your AI system. You may feel like you are lost in a dark tunnel, but fear not. Our MLOps as a Service means you will be up and running in no time at all. We will fully set up your infrastructure (at ML2GROW or via Google Cloud) and get it ready for you to use. We will semi-automate your deployment pipelines and integrate them neatly with your development workflow. We will then make sure that your data is cleansed, validated and prepared for the next round of training. All that remains for you to do is to start exploring your AI.

Our package offers you full peace of mind and includes the setup, management and maintenance of your infrastructure. This leaves you free to focus on your daily operations, boosted by the power of AI.

Our MLOps as a Service combines two services, without twice the cost:

— MLOps platform

— In-house MLOps team